datatool¶

Implements a general-purpose data loader for non-sequential machine learning tasks in Python. Given a directory path, the data loader reads data files (in commonly found formats) and returns a Python object containing the data as NumPy matrices, along with some supporting functions for manipulating the data. The data loader supports data sets containing multiple feature inputs and multiple target labels.

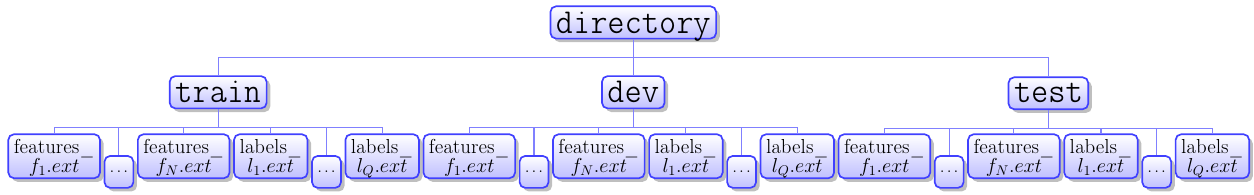

Directory Structure¶

The top-level directory can have any name. It must contain three subdirectories named train, dev, and test. These three names are mandatory.

If the train, dev, and test directories are not present, Bad_directory_structure_error is raised during loading. The top-level directory may contain other files besides these three directories. According to the diagram:

- N is the number of feature sets, not to be confused with the number of elements in a feature vector for a particular feature set.

- Q is the number of label sets, not to be confused with the number of elements in a label vector for a particular label set.

- The hash (dictionary key) for a matrix in a DataSet.features attribute is whatever is between features_ and the file extension (.ext) in the file name.

- The hash (dictionary key) for a matrix in a DataSet.labels attribute is whatever is between labels_ and the file extension (.ext) in the file name.
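The key rule above can be sketched in a few lines of Python. This is only an illustration: `key_from_filename` is a hypothetical helper, not a function of the loader module, and the file names are made up.

```python
import os

def key_from_filename(filename):
    """Return (kind, key) for a features_/labels_ data file name.

    Hypothetical helper for illustration; not part of the loader module.
    """
    base = os.path.basename(filename)
    stem, _ext = os.path.splitext(base)  # drop the file extension
    for prefix in ("features_", "labels_"):
        if stem.startswith(prefix):
            # kind is "features" or "labels"; key is the rest of the stem
            return prefix.rstrip("_"), stem[len(prefix):]
    raise ValueError("not a features_ or labels_ file: %r" % filename)

print(key_from_filename("train/features_descriptor.mat"))
print(key_from_filename("dev/labels_ratings.sparsetxt"))
```

Under this sketch, a file named features_descriptor.mat would be stored under the key 'descriptor' in the features dictionary.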

Notes¶

Rows of feature and label matrices should correspond to individual data points (not columns). Every file in the train directory should contain the same number of data points, and the same holds within the dev directory and within the test directory. The number of data points may differ between the train, dev, and test directories.
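A minimal sketch of the row-count requirement, assuming the matrices of one directory are already held in a dictionary of NumPy arrays. The helper name `check_row_counts`, the keys, and the shapes are hypothetical, and the sketch raises a plain ValueError rather than the loader's own exception.

```python
import numpy as np

def check_row_counts(matrices):
    """Return the shared number of rows, or raise ValueError on a mismatch.

    `matrices` maps a key to a 2-D array; this helper is hypothetical.
    """
    counts = {key: m.shape[0] for key, m in matrices.items()}
    if len(set(counts.values())) > 1:
        raise ValueError("mismatched number of data points: %r" % counts)
    # An empty dictionary corresponds to an empty folder: zero data points.
    return next(iter(counts.values()), 0)

train = {
    "features_pixels": np.zeros((5, 784)),  # 5 data points, 784 features each
    "labels_digit": np.zeros((5, 10)),      # 5 data points, 10 label entries each
}
print(check_row_counts(train))
```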

Supported Formats¶

- .mat: MATLAB files of matrices made with the MATLAB save command. Saved matrices to be read must be named data.

- .sparsetxt: Plain text files in which each line describes one matrix entry as three values i j k, so that the constructed matrix A satisfies \(A_{ij} = k\). Tokens must be whitespace-delimited.

- .densetxt: Plain text files with a matrix represented in standard form. Tokens must be whitespace-delimited.

- .sparse: Like .sparsetxt files but written in binary (no delimiters) to save disk space and speed up file I/O. Matrix dimensions are contained in the first bytes of the file.

- .binary: Like .densetxt files but written in binary (no delimiters) to save disk space and speed up file I/O. Matrix dimensions are contained in the first bytes of the file.
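To make the .sparsetxt layout concrete, here is a rough sketch of turning `i j k` lines into a matrix. It is not the loader's implementation. Two things are assumptions: the index base (the source does not say whether indices start at 0 or 1) and the matrix shape, which is inferred here from the largest indices seen. The name `parse_sparsetxt` is hypothetical.

```python
import numpy as np

def parse_sparsetxt(lines, index_base=0):
    """Build a dense matrix from whitespace-delimited `i j k` lines.

    index_base is an assumption: the source does not say whether row and
    column indices start at 0 or 1.
    """
    entries = []
    for line_number, line in enumerate(lines, start=1):
        tokens = line.split()
        if not tokens:
            continue  # this sketch tolerates blank lines
        if len(tokens) != 3:
            raise ValueError("line %d: expected 3 tokens, got %d"
                             % (line_number, len(tokens)))
        i, j, k = int(tokens[0]), int(tokens[1]), float(tokens[2])
        entries.append((i - index_base, j - index_base, k))
    # Assumption: the shape is inferred from the largest indices seen.
    n_rows = 1 + max((i for i, _, _ in entries), default=-1)
    n_cols = 1 + max((j for _, j, _ in entries), default=-1)
    A = np.zeros((n_rows, n_cols))
    for i, j, k in entries:
        A[i, j] = k  # A_ij = k, as described for .sparsetxt
    return A

A = parse_sparsetxt(["0 0 1.5", "1 2 -2", "2 1 4"])
print(A)
```

A .densetxt file, by contrast, could plausibly be read with `numpy.loadtxt`, since it is a whitespace-delimited matrix.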

Possible Extensions¶

- Data transformations: mean cancellation, KL expansion, covariance equalization, data whitening, shifting labels to avoid the asymptotes of the logistic function.

- Feed: advanced shuffling, variable batch size.

- Data sets: support for sequential and tensor data.

loader¶

exception loader.Bad_directory_structure_error¶

Raised when a specified data directory does not contain subfolders named train, dev, and test. Any of these directories may be empty, in which case the loader hands back a DataSet object containing no data for the empty folder.
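The directory check described here might look roughly like the following sketch. `missing_subdirs` is a hypothetical helper written for illustration, and the temporary directory only stands in for a real data folder.

```python
import os
import tempfile

REQUIRED_SUBDIRS = ("train", "dev", "test")

def missing_subdirs(directory):
    """Return the required subdirectory names that are absent from directory."""
    return [name for name in REQUIRED_SUBDIRS
            if not os.path.isdir(os.path.join(directory, name))]

with tempfile.TemporaryDirectory() as root:
    os.mkdir(os.path.join(root, "train"))
    os.mkdir(os.path.join(root, "dev"))
    before = missing_subdirs(root)  # "test" has not been created yet
    os.mkdir(os.path.join(root, "test"))
    after = missing_subdirs(root)   # all three exist, even though they are empty

print(before, after)
```

A loader following the documented behaviour would raise Bad_directory_structure_error whenever such a list is non-empty.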

class loader.DataSet(features, labels, num_data_points)¶

- epochs_completed¶ Number of epochs the data has been used to train with.

- features¶ A hashmap (dictionary) of matrices from files with a features_ prefix. Keys are derived from what’s between features_ and the file extension in the filename, e.g. the key to a matrix read from a data file named features_descriptor.ext is the string ‘descriptor’.

- index_in_epoch¶ The number of data points that have been trained on in the current epoch.

- labels¶ A hashmap (dictionary) of matrices from files with a labels_ prefix. Keys are derived from what’s between labels_ and the file extension in the filename, e.g. the key to a matrix read from a data file named labels_descriptor.ext is the string ‘descriptor’.

- next_batch(batch_size)¶ Parameters: batch_size – int. Returns: A DataSet object with the next batch_size examples. If batch_size is greater than the number of data points in the data stream, a Python assert fails and the loader stops. If batch_size is greater than the number of examples left in the epoch, all the matrices in the data stream are shuffled and a DataSet object containing the first batch_size rows of the shuffled feature matrices and label matrices is returned.
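The batching behaviour described for next_batch can be sketched with a toy class. This is an illustration under assumptions, not the loader's code: `MiniDataSet` is hypothetical, it returns a pair of dictionaries instead of a DataSet object, and the fixed random seed exists only to make the example repeatable.

```python
import numpy as np

class MiniDataSet:
    """Toy stand-in for DataSet, written only to illustrate next_batch."""

    def __init__(self, features, labels, seed=0):
        self.features = features  # dict: key -> 2-D array
        self.labels = labels      # dict: key -> 2-D array
        first = next(iter(features.values()))
        self.num_data_points = first.shape[0]
        self.index_in_epoch = 0
        self.epochs_completed = 0
        self._rng = np.random.default_rng(seed)

    def next_batch(self, batch_size):
        assert batch_size <= self.num_data_points
        if self.index_in_epoch + batch_size > self.num_data_points:
            # Not enough examples left: finish the epoch, shuffle every
            # matrix with the same permutation, and restart from row 0.
            self.epochs_completed += 1
            perm = self._rng.permutation(self.num_data_points)
            self.features = {k: m[perm] for k, m in self.features.items()}
            self.labels = {k: m[perm] for k, m in self.labels.items()}
            self.index_in_epoch = 0
        start = self.index_in_epoch
        self.index_in_epoch += batch_size
        rows = slice(start, self.index_in_epoch)
        return ({k: m[rows] for k, m in self.features.items()},
                {k: m[rows] for k, m in self.labels.items()})

X = np.arange(10).reshape(5, 2)  # 5 data points, row i is [2i, 2i + 1]
y = np.arange(5).reshape(5, 1)   # label of row i is i
data = MiniDataSet({"x": X}, {"y": y})
f1, l1 = data.next_batch(3)  # rows 0..2 of the unshuffled data
f2, l2 = data.next_batch(3)  # only 2 rows left, so shuffle and restart
print(data.epochs_completed, data.index_in_epoch)
```

Applying one permutation to every matrix keeps each feature row aligned with its label row after the shuffle.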

class loader.DataSets(train, dev, test)¶

A record of DataSet objects.

exception loader.Mat_format_error¶

Raised if the .mat file being read does not contain a variable named data.

exception loader.Mismatched_data_error¶

Raised if there is a mismatch in the number of rows between two matrices of a DataSet object. The number of rows is the number of data points, or examples, and this loader assumes that each example will have each feature set and each label set. If you have missing labels or missing features for a particular example, they may be substituted with some appropriate sentinel value.

exception loader.Missing_data_error¶

Raised if the directory contains a features file without an accompanying labels file, or vice versa.

exception loader.Sparse_format_error¶

Raised when reading a plain text file with the .sparsetxt extension and there are not three entries per line.

exception loader.Unsupported_format_error¶

Raised when a file with a name beginning with labels_ or features_ is encountered without one of the supported file extensions. It is okay to have other file types in your directory as long as their names don’t begin with labels_ or features_.

loader.center(A)¶

Parameters: A – Matrix to center about its mean.

Returns: Void (matrix is centered in place).
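As a rough illustration of in-place centering with NumPy, the sketch below subtracts each column's mean. Whether loader.center uses the per-column mean or a single overall mean is not stated in the source, so the column-wise choice is an assumption, as is the name `center_columns`.

```python
import numpy as np

def center_columns(A):
    """Subtract each column's mean from A in place (A must be a float array)."""
    # Assumption: "its mean" is taken per column (per feature).
    A -= A.mean(axis=0)

A = np.array([[1.0, 10.0],
              [3.0, 30.0]])
center_columns(A)
print(A)
```

Because the subtraction uses `-=`, the caller's array is modified and nothing is returned, which matches the "Void" return described above.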

loader.display(datafolder)¶

Parameters: datafolder – Root folder of matrices in .sparse, .binary, .densetxt, .sparsetxt, or .mat format.

Returns: Void (prints dimensions and hash names of matrices in the data set).

Calls read_data_sets and prints the dimensions and keys of the feature and label matrices in your train, dev, and test sets.

loader.import_data(filename)¶

Parameters: filename – A file of an accepted format representing a matrix.

Returns: A numpy matrix or scipy sparse csr_matrix.

Decides how to load data into Python matrices by file extension. Raises Unsupported_format_error if the extension is not one of the supported extensions (mat, sparse, binary, sparsetxt, densetxt). Data contained in .mat files should be saved in a matrix named data.
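The extension-based decision can be sketched as below. This is a hypothetical helper (`classify_data_file`), not the loader's code. It combines the prefix rule and the extension rule from this page, and raises a plain ValueError where the loader is documented to raise Unsupported_format_error.

```python
import os

SUPPORTED_EXTENSIONS = {".mat", ".sparse", ".binary", ".sparsetxt", ".densetxt"}

def classify_data_file(filename):
    """Return the extension to dispatch on, or None if the file is ignored.

    Hypothetical helper; raises ValueError where the loader is documented
    to raise Unsupported_format_error.
    """
    base = os.path.basename(filename)
    if not base.startswith(("features_", "labels_")):
        return None  # other files in the directory are allowed and skipped
    ext = os.path.splitext(base)[1]
    if ext not in SUPPORTED_EXTENSIONS:
        raise ValueError("unsupported format: %r" % filename)
    return ext

print(classify_data_file("train/features_descriptor.mat"))
print(classify_data_file("train/README.txt"))
```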

loader.matload(filename)¶

Parameters: filename – File from which to read.

Returns: The matrix which has been read.

Reads in a dense matrix from binary file filename.

loader.read_data_sets(directory)¶

Parameters: directory – Root directory containing train, test, and dev data folders.

Returns: A DataSets object.

Constructs a DataSets object from files in folder directory.

loader.read_int64(file_obj)¶

Parameters: file_obj – The open file object from which to read.

Returns: The eight bytes read from the file object interpreted as a long int.

Reads an 8-byte binary integer from a file.
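Reading an 8-byte integer from an open binary file can be sketched with the standard struct module. Little-endian, signed decoding is an assumption here, since the source does not state the byte order, and `read_int64_sketch` is a hypothetical name. An in-memory buffer stands in for a real file.

```python
import io
import struct

def read_int64_sketch(file_obj):
    """Read 8 bytes from file_obj and decode them as a signed 64-bit integer.

    Little-endian byte order is an assumption; the source does not state it.
    """
    raw = file_obj.read(8)
    if len(raw) != 8:
        raise EOFError("expected 8 bytes, got %d" % len(raw))
    return struct.unpack("<q", raw)[0]

# Two consecutive integers, e.g. a row count followed by a column count.
buffer = io.BytesIO(struct.pack("<qq", 3, 4))
rows = read_int64_sketch(buffer)
cols = read_int64_sketch(buffer)
print(rows, cols)
```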

loader.smatload(filename)¶

Parameters: filename – The file from which to read.

Returns: A dense matrix created from the sparse data.

Reads in a sparse matrix from a binary file.

loader.toIndex(A)¶

Parameters: A – A matrix of one-hot row vectors.

Returns: The hot indices.
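One common way to recover hot indices from one-hot rows is a row-wise argmax, sketched below with NumPy. This is an illustration of the idea rather than the loader's implementation, and `to_index_sketch` is a hypothetical name.

```python
import numpy as np

def to_index_sketch(A):
    """Return the column index of the largest entry in each row of A."""
    return np.argmax(A, axis=1)

one_hot = np.array([[0, 0, 1],
                    [1, 0, 0],
                    [0, 1, 0]])
print(to_index_sketch(one_hot))
```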